Brain-computer interfaces are a groundbreaking technology that can help paralyzed people regain functions they have lost, like moving a hand. These devices record signals from the brain and decipher the user’s intended action, bypassing damaged or degraded nerves that would normally transmit those brain signals to control muscles.

Since 2006, demonstrations of brain-computer interfaces in humans have primarily focused on restoring arm and hand movements by enabling people to control computer cursors or robotic arms. Recently, researchers have begun developing speech brain-computer interfaces to restore communication for people who cannot speak.

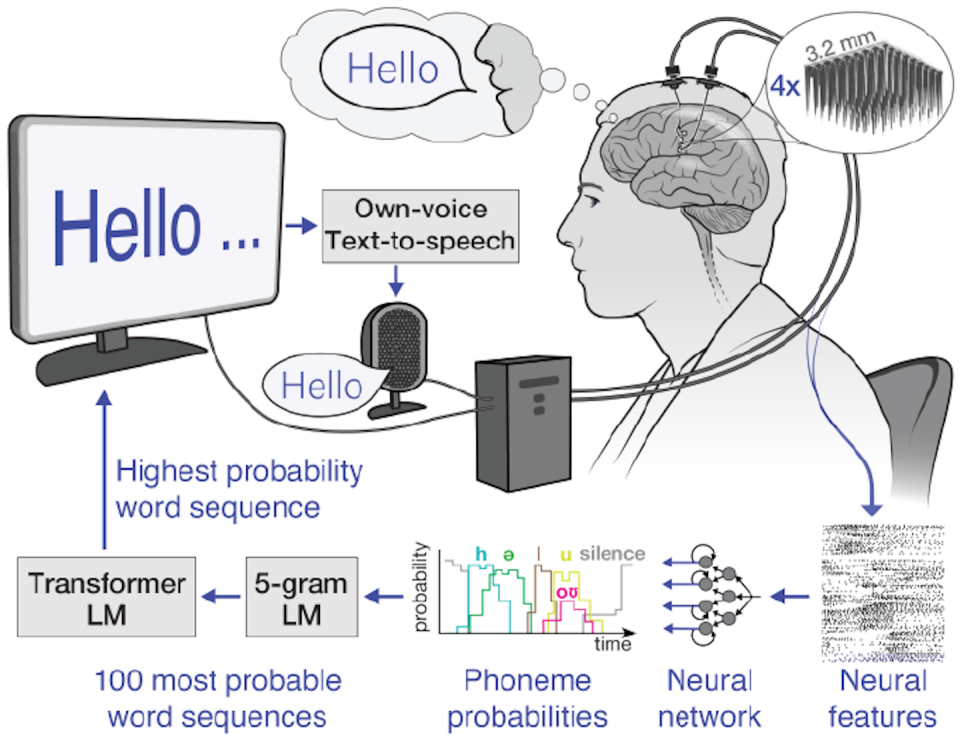

As the user attempts to talk, these brain-computer interfaces record the person’s unique brain signals associated with attempted muscle movements for speaking and then translate them into words. These words can then be displayed as text on a screen or spoken aloud using text-to-speech software.

I’m a researcher in the Neuroprosthetics Lab at the University of California, Davis, which is part of the BrainGate2 clinical trial. My colleagues and I recently demonstrated a speech brain-computer interface that deciphers the attempted speech of a man with ALS, or amyotrophic lateral sclerosis, also known as Lou Gehrig’s disease. The interface converts neural signals into text with over 97% accuracy. Key to our system is a set of artificial intelligence language models: artificial neural networks that help interpret natural ones.

Recording brain signals

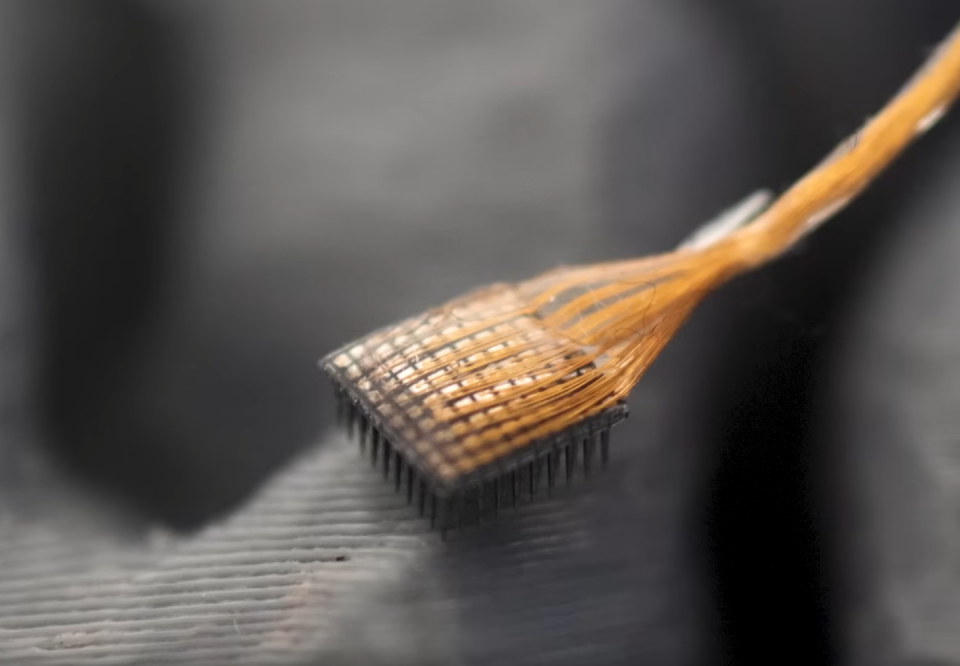

The first step in our speech brain-computer interface is recording brain signals. There are several sources of brain signals, some of which require surgery to record. Surgically implanted recording devices can capture high-quality brain signals because they are placed closer to neurons, resulting in stronger signals with less interference. These neural recording devices include grids of electrodes placed on the brain’s surface or electrodes implanted directly into brain tissue.

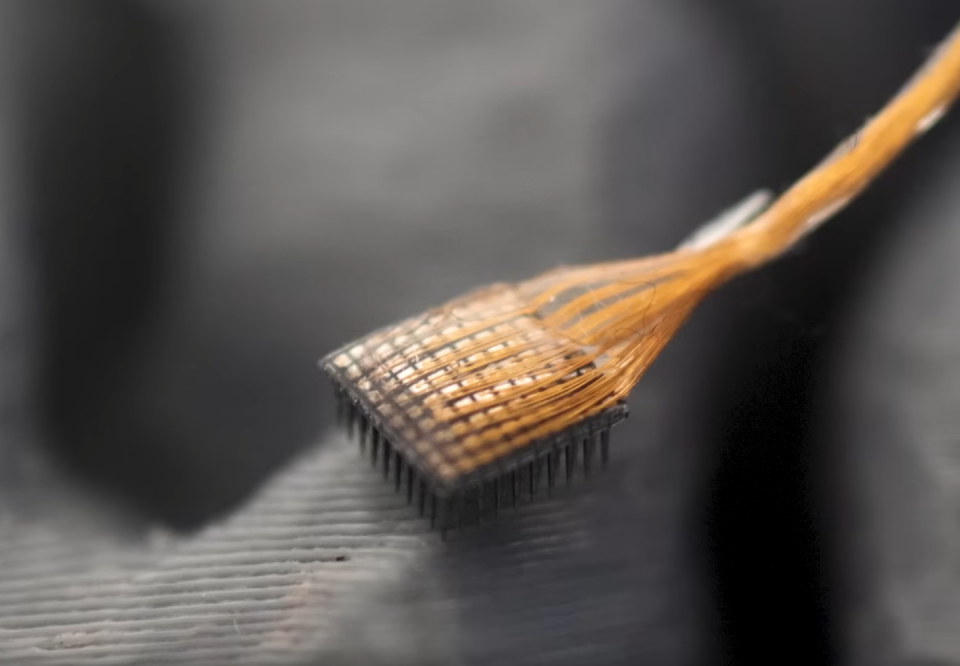

In our study, we used electrode arrays surgically placed in the speech motor cortex of the participant, Casey Harrell. This is the part of the brain that controls the muscles involved in speech. We recorded neural activity from 256 electrodes as Harrell attempted to speak.
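The article does not say how the recordings from the 256 electrodes become numbers that a decoder can use. One common approach, assumed here rather than taken from the study, is to count threshold crossings (putative spikes) on each electrode within short, fixed time bins. The sketch below uses a hypothetical 20 ms bin width and a hypothetical `bin_spikes` helper. It shows the resulting shape: one row per time bin and one column per electrode.

```python
# Assumption (not stated in the article): neural activity is summarized as
# threshold-crossing counts per electrode in fixed 20 ms time bins.
N_ELECTRODES = 256
BIN_MS = 20


def bin_spikes(events, duration_ms, n_electrodes=N_ELECTRODES, bin_ms=BIN_MS):
    """Turn (electrode, time_ms) events into per-bin count vectors.

    Returns a list with one row per time bin; each row holds one count
    per electrode. Events outside the recording window are ignored.
    """
    n_bins = duration_ms // bin_ms
    counts = [[0] * n_electrodes for _ in range(n_bins)]
    for electrode, t_ms in events:
        b = int(t_ms // bin_ms)
        if 0 <= b < n_bins and 0 <= electrode < n_electrodes:
            counts[b][electrode] += 1
    return counts


# Three hypothetical events during 60 ms of attempted speech.
features = bin_spikes([(0, 3.0), (0, 15.2), (255, 41.7)], duration_ms=60)
print(len(features), len(features[0]))   # 3 256
print(features[0][0], features[2][255])  # 2 1
```

Each row of `features` is the kind of vector that the decoding steps described next would consume, one time step at a time.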

Decoding brain signals

The next challenge is relating the complex brain signals to the words the user is trying to say.

One approach is to map neural activity patterns directly to spoken words. This method requires recording the brain signals for each word multiple times to identify the average relationship between neural activity and specific words. This strategy works well for small vocabularies, as demonstrated in a 2021 study with a 50-word vocabulary, but it becomes impractical for larger ones. Imagine asking the brain-computer interface user to try to say every word in the dictionary multiple times. It could take months, and it still wouldn’t work for new words.
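The word-level approach amounts to two steps: average the repeated recordings into one template per word, then match new activity to the closest template. The sketch below is a minimal nearest-template classifier built on that assumption. The feature vectors are made-up two-dimensional stand-ins for real neural features, and `average_templates` and `classify` are hypothetical names. The sketch also makes the scaling problem concrete, because the classifier can only ever return words that appear in `trials`.

```python
import math


def average_templates(trials):
    """Average each word's repeated recordings into one template vector.

    trials maps word -> list of feature vectors (one per attempt).
    """
    templates = {}
    for word, vectors in trials.items():
        n = len(vectors)
        # zip(*vectors) walks feature by feature across all attempts.
        templates[word] = [sum(feature) / n for feature in zip(*vectors)]
    return templates


def classify(templates, x):
    """Return the word whose template is closest to x (Euclidean distance)."""
    return min(templates, key=lambda word: math.dist(templates[word], x))


# Hypothetical training data: two attempts each at two words.
trials = {
    "yes": [[1.0, 0.0], [0.8, 0.2]],
    "no": [[0.0, 1.0], [0.2, 0.9]],
}
templates = average_templates(trials)
print(classify(templates, [0.7, 0.3]))  # yes
```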

Instead, we use an alternative strategy: mapping brain signals to phonemes, the basic units of sound that make up words. English has 39 phonemes, including ch, er, oo and sh, and they can be combined to form any word. We can measure the neural activity associated with every phoneme multiple times just by asking the participant to read a few sentences aloud. By accurately mapping neural activity to phonemes, we can assemble them into any English word, even ones the system wasn’t explicitly trained with.
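After phonemes are decoded, turning them into words can be framed as looking up the phoneme sequence in a pronunciation dictionary. The sketch below assumes a tiny hypothetical lexicon written in ARPAbet-style symbols, the notation used by the CMU Pronouncing Dictionary. It enumerates every way to split a phoneme sequence into dictionary words. Adding a word to the dictionary is enough for it to be produced, and no neural recordings of that specific word are needed.

```python
# Hypothetical mini pronunciation dictionary (ARPAbet-style symbols).
LEXICON = {
    ("HH", "AH", "L", "OW"): "hello",
    ("M", "AY"): "my",
    ("F", "R", "EH", "N", "D"): "friend",
    ("F", "R", "EH", "N", "D", "Z"): "friends",
}


def segment(phonemes, lexicon=LEXICON):
    """Return every way to split a phoneme sequence into dictionary words."""
    phonemes = tuple(phonemes)
    results = []

    def search(start, words):
        # Reached the end with every phoneme assigned to a word.
        if start == len(phonemes):
            results.append(" ".join(words))
            return
        # Try each possible next word that begins at `start`.
        for end in range(start + 1, len(phonemes) + 1):
            word = lexicon.get(phonemes[start:end])
            if word is not None:
                search(end, words + [word])

    search(0, [])
    return results


print(segment(["HH", "AH", "L", "OW", "M", "AY",
               "F", "R", "EH", "N", "D", "Z"]))  # ['hello my friends']
```

This brute-force search is only meant for short inputs. When the decoded phonemes are uncertain, many splits are possible, which is where the language models described below come in.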

To map brain signals to phonemes, we use advanced machine learning models. These models are particularly well suited for this task because they can find patterns in large amounts of complex data that would be impossible for humans to discern. Think of these models as super-smart listeners that can pick out important information from noisy brain signals, much like you might focus on a conversation in a crowded room. Using these models, we were able to decipher phoneme sequences during attempted speech with over 90% accuracy.
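The article does not name the model architecture. One common way for a neural network to emit a phoneme sequence from per-time-step outputs is Connectionist Temporal Classification (CTC). With CTC, the network scores every phoneme plus a special "blank" symbol at each time bin. The sketch below assumes a CTC-style output and shows the simplest read-out, greedy decoding: take the most likely symbol in each bin, merge consecutive repeats, then drop blanks. The probabilities are invented for illustration.

```python
BLANK = "_"  # CTC blank: "no new phoneme in this time bin"


def greedy_ctc_decode(frame_probs):
    """Greedy CTC decoding.

    frame_probs has one dict per time bin, mapping each symbol (phonemes
    plus BLANK) to its probability. Pick the most likely symbol per bin,
    merge consecutive repeats, then drop blanks.
    """
    best_path = [max(probs, key=probs.get) for probs in frame_probs]
    decoded = []
    previous = None
    for symbol in best_path:
        if symbol != previous and symbol != BLANK:
            decoded.append(symbol)
        previous = symbol
    return decoded


# Hypothetical model output for eight time bins of an attempted "hello".
frames = [
    {"HH": 0.7, "AH": 0.1, "_": 0.2},
    {"HH": 0.6, "_": 0.4},
    {"_": 0.9, "AH": 0.1},
    {"AH": 0.8, "_": 0.2},
    {"L": 0.5, "AH": 0.3, "_": 0.2},
    {"L": 0.6, "_": 0.4},
    {"_": 0.7, "OW": 0.3},
    {"OW": 0.9, "_": 0.1},
]
print(greedy_ctc_decode(frames))  # ['HH', 'AH', 'L', 'OW']
```

A blank between two identical symbols keeps them separate, which is how CTC can still emit a genuinely doubled phoneme.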

From phonemes to words

Once we have the deciphered phoneme sequences, we need to convert them into words and sentences. This is challenging, especially if the deciphered phoneme sequence isn’t perfectly accurate. To solve this puzzle, we use two complementary types of machine learning language models.

The first type is the n-gram language model, which predicts which word is most likely to come next given the preceding words. We trained a 5-gram, or five-word, language model on millions of sentences to predict the likelihood of a word based on the previous four words, capturing local context and common phrases. For example, after “I am very good,” it might rate “today” as more likely than “potato.” Using this model, we convert our phoneme sequences into the 100 most likely word sequences, each with an associated probability.
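The n-gram idea can be shown with a toy count-based model. This is a minimal sketch and not the study’s model. It uses a made-up three-sentence corpus, add-one (Laplace) smoothing and a hypothetical `NgramLM` class. Real 5-gram models are trained on far more text and typically use more refined smoothing, such as Kneser-Ney. With `n=5`, each word is predicted from the previous four, so the context “i am very good” is exactly what the model conditions on.

```python
import math
from collections import Counter


class NgramLM:
    """Toy count-based n-gram language model with add-one smoothing (n >= 2)."""

    def __init__(self, sentences, n=5):
        self.n = n
        self.ngram_counts = Counter()    # (w1, ..., wn) -> count
        self.context_counts = Counter()  # (w1, ..., w_{n-1}) -> count
        self.vocab = {"</s>"}
        for sentence in sentences:
            words = sentence.lower().split()
            self.vocab.update(words)
            # Pad with start symbols so the first words also have a full context.
            padded = ["<s>"] * (n - 1) + words + ["</s>"]
            for i in range(n - 1, len(padded)):
                context = tuple(padded[i - n + 1:i])
                self.ngram_counts[context + (padded[i],)] += 1
                self.context_counts[context] += 1

    def prob(self, history, word):
        """P(word | last n-1 words of history), add-one smoothed."""
        padded = ["<s>"] * (self.n - 1) + list(history)
        context = tuple(padded[-(self.n - 1):])
        numerator = self.ngram_counts[context + (word,)] + 1
        denominator = self.context_counts[context] + len(self.vocab)
        return numerator / denominator

    def sentence_logprob(self, sentence):
        """Sum of log P(word | previous words), including the end symbol."""
        words = sentence.lower().split() + ["</s>"]
        return sum(math.log(self.prob(words[:i], w)) for i, w in enumerate(words))

    def top_k(self, candidates, k=100):
        """Return the k most probable candidate sentences, best first."""
        return sorted(candidates, key=self.sentence_logprob, reverse=True)[:k]


corpus = [
    "i am very good today",
    "i am very good today thanks",
    "i ate a potato",
]
lm = NgramLM(corpus, n=5)
history = ["i", "am", "very", "good"]
print(lm.prob(history, "today"), lm.prob(history, "potato"))
print(lm.top_k(["i am very good potato", "i am very good today"], k=1))
```

Here, “today” follows that context twice in the corpus and “potato” never does. With a 10-word vocabulary, add-one smoothing gives (2 + 1) / (2 + 10) = 0.25 for “today” and (0 + 1) / (2 + 10) = 1/12 for “potato”. `top_k` is the step that keeps the best-scoring candidates, 100 in the article’s description.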

The second type is the large language model, the kind that powers AI chatbots, which also predicts which words most likely follow others. We use large language models to refine our choices. These models, trained on vast amounts of diverse text, have a broader understanding of language structure and meaning. They help us determine which of our 100 candidate sentences makes the most sense in a wider context.

By carefully balancing probabilities from the n-gram model, the large language model and our initial phoneme predictions, we can make a highly educated guess about what the brain-computer interface user is trying to say. This multistep process allows us to handle the uncertainties in phoneme decoding and produce coherent, contextually appropriate sentences.
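One simple way to balance the three sources of evidence is to take a weighted sum of their log-probabilities and pick the highest-scoring candidate. The sketch below assumes that form. The weights, the candidate sentences and every log-probability are invented for illustration. The large language model score is a number supplied with each candidate, not a call to a real model. The example shows how the language model’s vote can overturn a choice that the phoneme and n-gram scores alone would make.

```python
def combined_score(candidate, w_phoneme=1.0, w_ngram=1.0, w_llm=0.5):
    """Weighted sum of log-probabilities from the three models.

    The default weights are hypothetical; in practice they would be tuned
    on held-out data.
    """
    return (
        w_phoneme * candidate["phoneme_logp"]
        + w_ngram * candidate["ngram_logp"]
        + w_llm * candidate["llm_logp"]
    )


def pick_best(candidates, **weights):
    """Return the text of the highest-scoring candidate sentence."""
    return max(candidates, key=lambda c: combined_score(c, **weights))["text"]


# Invented scores for two of the (up to 100) candidate sentences.
candidates = [
    {"text": "i am very good today",
     "phoneme_logp": -4.0, "ngram_logp": -6.0, "llm_logp": -20.0},
    {"text": "i am very good to day",
     "phoneme_logp": -3.0, "ngram_logp": -6.5, "llm_logp": -30.0},
]
print(pick_best(candidates))             # i am very good today
print(pick_best(candidates, w_llm=0.0))  # i am very good to day
```

Working in log space turns the product of probabilities into a sum. The weights then control how much each model is trusted relative to the others.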

Real-world benefits

In practice, this speech decoding strategy has been remarkably successful. We’ve enabled Casey Harrell, a man with ALS, to “speak” with over 97% accuracy simply by attempting to talk. This breakthrough allows him to converse easily with his family and friends for the first time in years, all in the comfort of his own home.
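Accuracy figures like this are commonly reported as one minus the word error rate. The word error rate is the minimum number of word substitutions, insertions and deletions needed to turn the decoded sentence into the intended one, divided by the number of intended words. The article does not spell out its metric, so the sketch below is an assumption about how such a number is computed. The sentences are made up.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token lists
    (substitutions, insertions and deletions each cost 1)."""
    previous = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        current = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            current[j] = min(
                previous[j] + 1,             # delete reference word r
                current[j - 1] + 1,          # insert decoded word h
                previous[j - 1] + (r != h),  # substitute (free if equal)
            )
        previous = current
    return previous[-1]


def word_accuracy(reference, decoded):
    """1 - word error rate, where WER = edit distance / reference length."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference sentence must not be empty")
    return 1.0 - edit_distance(ref_words, decoded.split()) / len(ref_words)


print(word_accuracy("i am very good", "i am very good"))  # 1.0
print(word_accuracy("i am very good", "i am very food"))  # 0.75
```

Under this definition, 97% accuracy would mean roughly 3 word errors per 100 intended words. Because insertions count as errors, a very poor decode can push the word error rate above 100%.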

Speech brain-computer interfaces represent a significant step forward in restoring communication. As we continue to refine these devices, they hold the promise of giving a voice to those who have lost the ability to speak, reconnecting them with their loved ones and the world around them.

However, challenges remain, such as making the technology more accessible, portable and durable over years of use. Despite these hurdles, speech brain-computer interfaces are a powerful example of how science and technology can come together to solve complex problems and dramatically improve people’s lives.

This article is republished from The Conversation, a nonprofit, independent news organization bringing you facts and trustworthy analysis to help you make sense of our complex world. It was written by: Nicholas Card, University of California, Davis


Nicholas Card does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

Ferdja Ferdja.com delivers the latest news and relevant information across various domains including politics, economics, technology, culture, and more. Stay informed with our detailed articles and in-depth analyses.
