Major companies are moving at high speed to capture the promises of artificial intelligence in healthcare while doctors and experts attempt to integrate the technology safely into patient care.

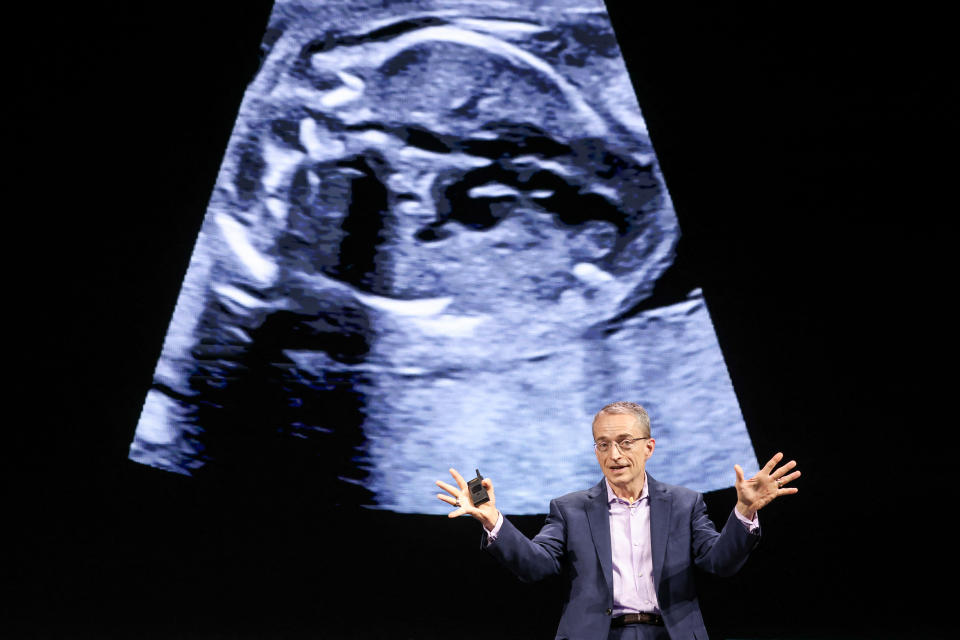

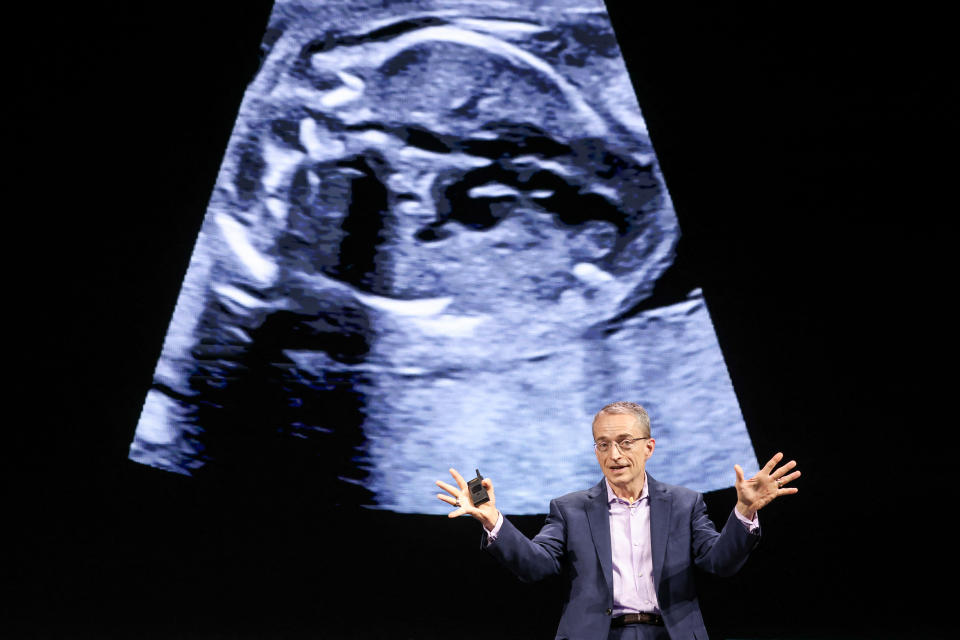

“Healthcare is probably the most impactful utility of generative AI that there will be,” Kimberly Powell, vice president of healthcare at AI hardware giant Nvidia (NVDA), declared at the company’s AI Summit in June. Nvidia has partnered with Roche’s Genentech (RHHBY) to enhance drug discovery in the pharmaceutical industry, among other investments in healthcare companies.

Other tech names such as Amazon (AMZN), Google (GOOG, GOOGL), Intel (INTC), and Microsoft (MSFT) have also emphasized AI’s potential in healthcare and landed partnerships aimed at improving AI models.

Growing demand for more efficient healthcare operations has tech companies racing to develop AI applications that assist with everything from appointment scheduling and drug development to billing to reading and interpreting scans.

The overall market for AI in healthcare is expected to grow to $188 billion by 2030 from $11 billion in 2021, according to Precedence Research. The market for clinical software alone is expected to increase by $2.76 billion from 2023 to 2028, according to Technavio.

Practitioners, meanwhile, are preparing for the potential technological revolution.

Sneha Jain, a chief fellow at Stanford University’s Division of Cardiovascular Medicine, said that AI has the potential to become as integrated with the healthcare system as the internet, while also stressing the importance of using the technology responsibly.

“People want to err on the side of caution because in the oath of becoming a doctor and for healthcare providers, it’s, ‘First, do no harm,’” Jain told Yahoo Finance. “So how do we make sure that we ‘First, do no harm’ while also really pushing forward and advancing the way AI is used in healthcare?”

Potential patients appear wary: A recent Deloitte Consumer Health Care survey found that 30% of respondents stated they “don’t trust the information” provided by generative AI for healthcare, compared to 23% a year ago.

The concern: ‘Garbage in, garbage out’

People appear to have reason to doubt AI’s current capabilities.

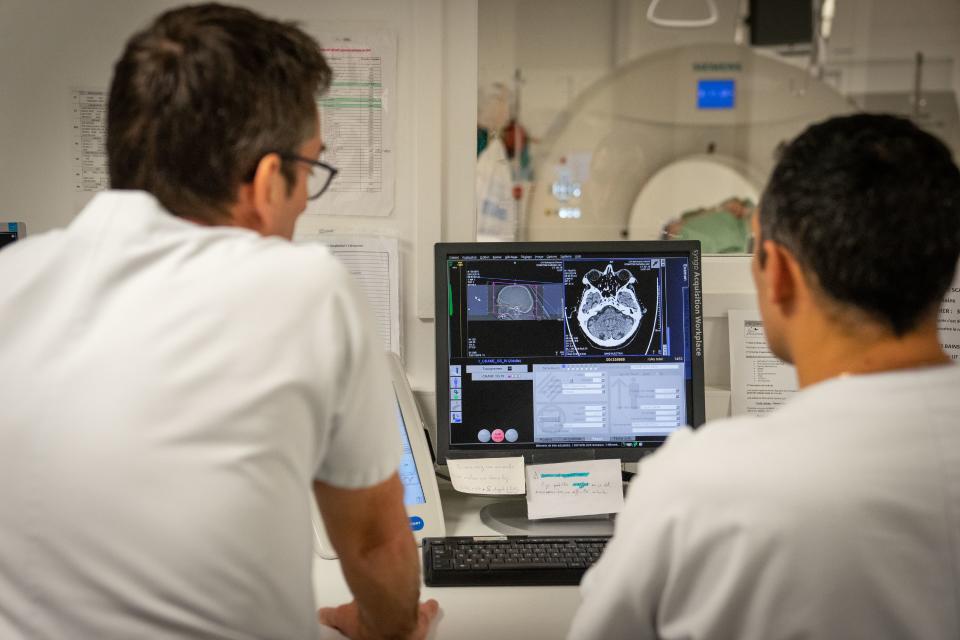

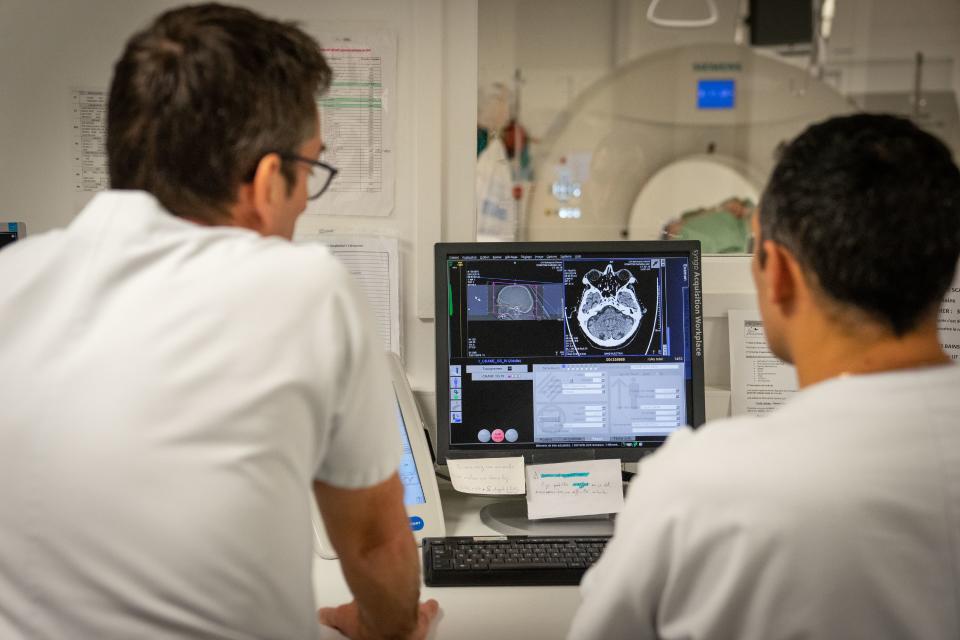

A study published in May evaluating large multimodal models (LMMs), which interpret media like images and videos, found that AI models like OpenAI’s GPT-4V performed worse in medical settings than random guessing when asked questions regarding medical diagnosis.

“These findings underscore the urgent need for robust evaluation methodologies to ensure the reliability and accuracy of LMMs in medical imaging,” the study’s authors wrote.

Dr. Safwan Halabi, vice chair of imaging informatics at Lurie Children’s Hospital in Chicago, highlighted the trust issues surrounding the implementation of these models by comparing the use of AI in healthcare without testing to the use of self-driving cars without conducting proper test drives.

In particular, Halabi expressed concern about bias. If a model was trained using health data about Caucasians in Northern California, he said, it may not be able to provide appropriate and accurate care to African Americans on the South Side of Chicago.

“The data is only as good as its source, and so the concern is garbage in, garbage out,” Halabi told Yahoo Finance. He added that “good medicine is slow medicine” and stressed the importance of safety tests before putting the technology into practice.

Yet others, like Dr. John Halamka, president of the technology initiative Mayo Clinic Platform, emphasized AI’s potential to leverage the expertise of countless doctors.

“Wouldn’t you like the experience of millions and millions of patient journeys to be captured in a predictive algorithm so that a clinician could say, ‘Well, yes, I have my training and my experience, but can I leverage the patients of the past to help me treat patients of the future in the best possible way?’” Halamka told Yahoo Finance.

Creating guardrails

Following an executive order signed by President Biden last year to develop policies that would advance the technology while managing risks, Halamka and other researchers have argued for standardized, agreed-upon principles to assess AI models for bias, among other concerns.

“AI, whether predictive or generative, is math not magic,” Halamka said. “We recognize the need to build a public-private collaboration, bringing government, academia, and industry together to create the guardrails and the guidelines so that anyone who is doing healthcare and AI has a way of measuring: Well, is this fair? Is it appropriate? Is it valid? Is it effective? Is it safe?”

They also stressed the need for a national network of assurance laboratories: places to test the validity and ethics of AI models. Jain is at the center of these discussions, as she’s starting one such lab at Stanford.

“The regulatory environment, the cultural environment, and the technical environment are ripe for this kind of progress on how to think about assurance and safety for AI, so it’s an exciting time,” she said.

However, training these models also poses privacy concerns, given the sensitive nature of a patient’s medical data, including their full legal name and date of birth.

While waiting for regulations around the technology to be defined, Lurie Children’s Hospital has instituted its own policies regarding AI use within its practice and has worked to ensure it does not expose patient information online.

“My predictions are [that AI is] here to stay, and we’re going to see more and more of it,” said Halabi, the vice chair of imaging informatics at Lurie. “But we won’t really see it because it’s going to be happening behind the scenes or it’s going to be happening in the whole care process and not overtly announced or disclosed.”


Ferdja Ferdja.com delivers the latest news and relevant information across various domains including politics, economics, technology, culture, and more. Stay informed with our detailed articles and in-depth analyses.
