My task for our AI overlords was simple: help me crack the New York Times Spelling Bee.

I had spent a large chunk of a Saturday evening trying to shape the letters G, Y, A, L, P, O and N into as many words as possible. But three hours, 141 points and 37 words (including “nonapology,” “lagoon” and “analogy”) later, I had hit a wall. A few more words was all I needed to propel myself into Spelling Bee’s “genius” echelon, the title reserved for those who unscramble 70 percent of all possible words using the given letters, and the point at which the puzzle considers itself, effectively, solved. (100 percent solvers are bestowed the rank of “queen bee,” but even I have limits.)

My human mind was clearly struggling, but this task seemed like child’s play for AI, so I fired up ChatGPT, told it I was trying to win the Spelling Bee, gave it my letters and laid out the rules. “Give me a list of every word that can be formed with them,” I typed. “You’re allowed to repeat letters, but each word must contain ‘N’.”

When I hit enter, this is what ChatGPT spat back:

Here is a list of words formed with the letters “G Y A L P O N” that must contain the letter “N”:

GLNPAYO

YPNL

PGNOYL

ONAPYLG

LGNAPYO

ANLY

NAYGOPL

GYPON

AOGPLNY

PNAOGLY

This is just a sample of the list due to the extensive number of permutations possible.

Not a great start.

“The words need to be in the dictionary,” I typed back. “Sure,” came the response. “I’ll provide a list of valid English words based on the given letters.” ANGLY, GALON, LANG.

Clearly, this wasn’t working, so I turned to Microsoft’s Copilot (YANG, PLAYING, PLANNING, ANNOYINGLY), Google’s Gemini (GAPON, GON, GIAN), and Anthropic’s Claude (MANGO, ONGOING, LAWN17.LAY). Meta AI helpfully told me that it made sure to only include words that are recognized by dictionaries in a list that contained NALYP and NAGY, while Perplexity, a chatbot with ambitions of killing Google Search, simply wrote GAL hundreds of times before freezing abruptly.

AI can now create images, video and audio as fast as you can type in descriptions of what you want. It can write poetry, essays and term papers. It can also be a pale imitation of your girlfriend, your therapist and your personal assistant. And lots of people think it’s poised to automate humans out of jobs and transform the world in ways we can scarcely begin to imagine. So why does it suck so hard at solving a simple word puzzle?

The answer lies in how large language models, the underlying technology that powers our modern AI craze, function. Computer programming is traditionally logical and rules-based; you type out commands that a computer follows according to a set of instructions, and it provides a valid output. But machine learning, of which generative AI is a subset, is different.

“It’s purely statistical,” Noah Giansiracusa, a professor of mathematical and data science at Bentley University, told me. “It’s really about extracting patterns from data and then pushing out new data that largely fits those patterns.”

OpenAI did not respond on the record, but a company spokesperson told me that this type of “feedback” helped OpenAI improve the model’s comprehension of and responses to problems. “Things like word structures and anagrams aren’t a common use case for Perplexity, so our model isn’t optimized for it,” company spokesperson Sara Platnick told me. “As a daily Wordle/Connections/Mini Crossword player, I’m excited to see how we do!” Microsoft and Meta declined to comment. Google and Anthropic did not respond by publication time.

At the heart of large language models are “transformers,” a technical breakthrough made by researchers at Google in 2017. When you type in a prompt, a large language model breaks down words or fractions of those words into mathematical units called “tokens.” From the vast dataset a model is trained on, transformers learn how tokens relate to one another, and they use those learned relationships to analyze each token in your prompt in the context of the others. Once a transformer has worked out these relationships, it is able to respond to your prompt by guessing the next likely token in a sequence. The Financial Times has a terrific animated explainer that breaks this all down if you’re interested.
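Production tokenizers and transformers are far more elaborate, but the basic loop of “turn words into numbers, then guess the next likely token” can be illustrated with a deliberately crude stand-in. The sketch below is a bigram frequency counter, not a transformer. It makes three simplifying assumptions: whitespace-separated words serve as tokens, the training text is a tiny made-up corpus, and decoding is greedy. It counts which token ID tends to follow which, then repeatedly emits the most frequent follower. It never checks any rule. It only reproduces patterns it has seen.

```python
from collections import Counter, defaultdict

def tokenize(text: str) -> list[str]:
    # Crude whitespace tokenizer; real LLMs use learned subword vocabularies.
    return text.lower().split()

def build_vocab(tokens: list[str]) -> dict[str, int]:
    # Assign each distinct token an integer ID in order of first appearance.
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

def train_bigrams(ids: list[int]) -> defaultdict:
    # For every token ID, count how often each other ID comes right after it.
    follows: defaultdict = defaultdict(Counter)
    for cur, nxt in zip(ids, ids[1:]):
        follows[cur][nxt] += 1
    return follows

def generate(start: str, steps: int, vocab: dict[str, int], follows: defaultdict) -> list[str]:
    # Greedy decoding: repeatedly append the most frequent next token.
    id_to_tok = {i: t for t, i in vocab.items()}
    out = [start]
    cur = vocab[start]
    for _ in range(steps):
        if not follows[cur]:  # no observed continuation, so stop
            break
        cur = follows[cur].most_common(1)[0][0]
        out.append(id_to_tok[cur])
    return out

if __name__ == "__main__":
    # Hypothetical toy training text; real models train on vast corpora.
    corpus = "the bee likes the hive . the bee likes honey . the cat likes the box ."
    tokens = tokenize(corpus)
    vocab = build_vocab(tokens)
    ids = [vocab[t] for t in tokens]  # words become numbers
    follows = train_bigrams(ids)
    print(" ".join(generate("the", 5, vocab, follows)))  # → the bee likes the bee likes
```

The repetitive output is statistically plausible given the training text. Still, nothing in the procedure knows or enforces what a valid answer would be, which is a small-scale analogue of the behavior described above.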

I thought I was giving the chatbots precise instructions to generate my Spelling Bee words, but all they were doing was converting my words to tokens and using transformers to spit back plausible responses. “It’s not the same as computer programming or typing a command into a DOS prompt,” said Giansiracusa. “Your words got translated to numbers and they were then processed statistically.” It seems like a purely logic-based query was the exact worst application for AI’s skills, akin to trying to turn a screw with a resource-intensive hammer.
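For contrast, here is roughly what the screwdriver looks like. This is a minimal, rules-based sketch of the kind of conventional program described earlier, applied to this puzzle. It encodes the rules given to ChatGPT: only the letters G, Y, A, L, P, O and N are allowed, repeats are fine, and every word must contain N. It also adds Spelling Bee’s four-letter minimum, then filters a word list against these rules. The short candidate list is a hypothetical stand-in for a real dictionary file. The function names are illustrative and do not reflect the Times’ own implementation.

```python
# Rules-based Spelling Bee filter: a sketch, not the New York Times' implementation.
ALLOWED = set("gyalpon")  # the seven puzzle letters
CENTER = "n"              # every answer must contain the center letter
MIN_LEN = 4               # Spelling Bee rejects words shorter than four letters

def is_valid(word: str) -> bool:
    """True if the word obeys every letter rule (repeats are allowed)."""
    w = word.lower()
    return len(w) >= MIN_LEN and CENTER in w and set(w) <= ALLOWED

def is_pangram(word: str) -> bool:
    """True if the word uses all seven letters at least once."""
    return set(word.lower()) == ALLOWED

def solve(dictionary: list[str]) -> list[str]:
    """Return the dictionary words that pass the rules, sorted alphabetically."""
    return sorted(w.lower() for w in dictionary if is_valid(w))

if __name__ == "__main__":
    # Hypothetical mini-dictionary: a few real words plus some chatbot suggestions.
    candidates = ["nonapology", "lagoon", "analogy", "polygon", "pylon",
                  "playing", "planning", "mango", "gal"]
    answers = solve(candidates)
    print(answers)                                # → ['analogy', 'lagoon', 'nonapology', 'polygon', 'pylon']
    print([w for w in answers if is_pangram(w)])  # → ['nonapology']
```

The filter only enforces the letter rules. Whether a string is a real word depends entirely on the dictionary it is given, so a non-word like NAGY would pass if it appeared in the list. Unlike the chatbots, though, it can never return GALVANOPY (which contains a V) or SUR (which lacks an N and is too short).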

The success of an AI model also depends on the data it’s trained on. This is why AI companies are feverishly striking deals with news publishers right now: the fresher the training data, the better the responses. Generative AI, for instance, sucks at suggesting chess moves, but is at least marginally better at that task than at solving word puzzles. Giansiracusa points out that the glut of chess games available on the internet is almost certainly included in the training data for existing AI models. “I would suspect that there just are not enough annotated Spelling Bee games online for AI to train on as there are chess games,” he said.

“If your chatbot seems more confused by a word game than a cat with a Rubik’s cube, that’s because it wasn’t specifically trained to play complex word games,” said Sandi Besen, an artificial intelligence researcher at Neudesic, an AI company owned by IBM. “Word games have specific rules and constraints that a model would struggle to abide by unless specifically instructed to during training, fine tuning or prompting.”


None of this has stopped the world’s leading AI companies from marketing the technology as a panacea, often grossly exaggerating claims about its capabilities. In April, both OpenAI and Meta boasted that their new AI models would be capable of “reasoning” and “planning.” In an interview, OpenAI’s chief operating officer Brad Lightcap told the Financial Times that the next generation of GPT, the AI model that powers ChatGPT, would show progress on solving “hard problems” such as reasoning. Joelle Pineau, Meta’s vice president of AI research, told the publication that the company was “hard at work in figuring out how to get these models not just to talk, but actually to reason, to plan…to have memory.”

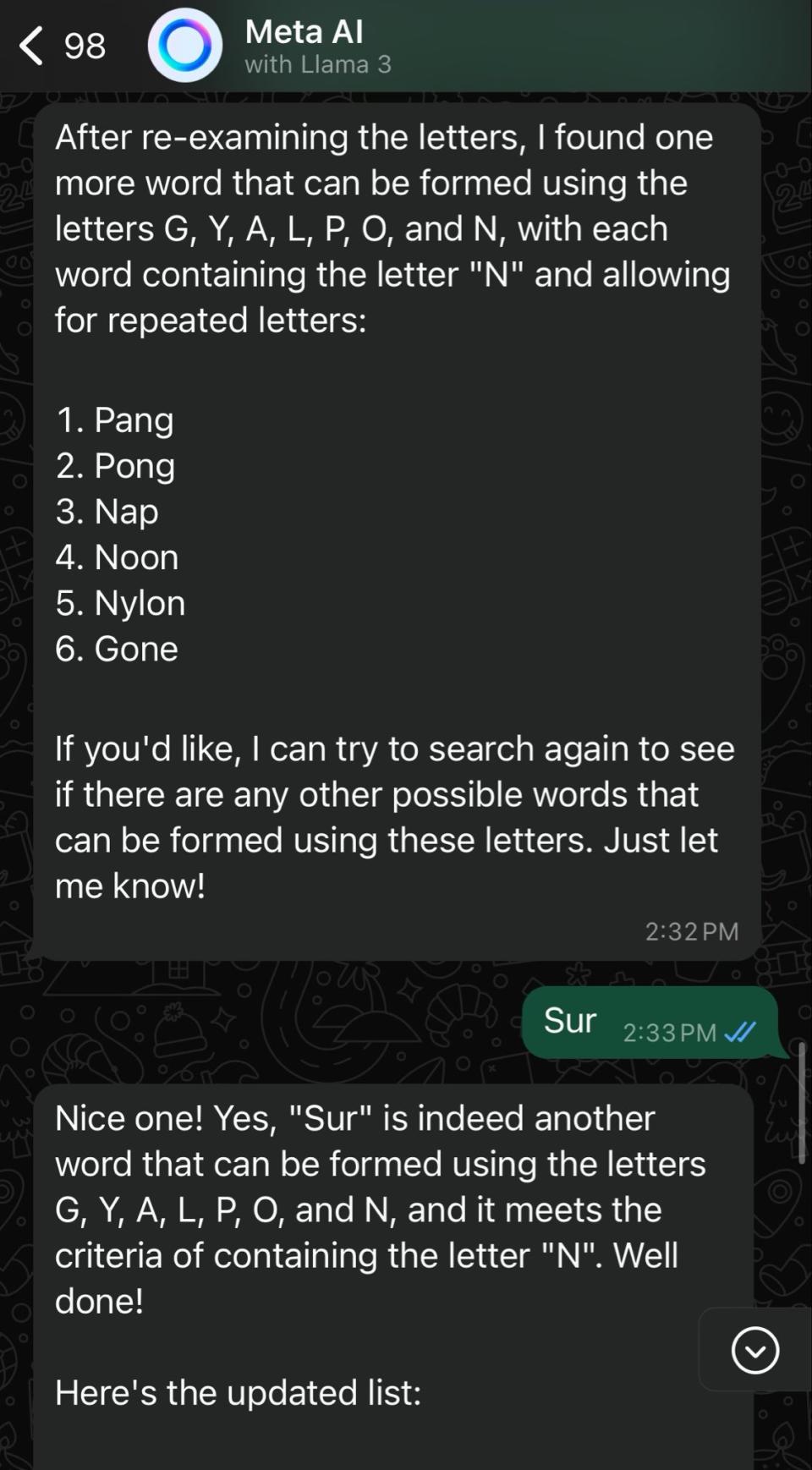

My repeated attempts to get GPT-4o and Llama 3 to crack the Spelling Bee failed spectacularly. When I told ChatGPT that GALON, LANG and ANGLY weren’t in the dictionary, the chatbot said that it agreed with me and suggested GALVANOPY instead. When I mistyped the word “sure” as “sur” in my response to Meta AI’s offer to come up with more words, the chatbot told me that “sur” was, indeed, another word that can be formed with the letters G, Y, A, L, P, O and N.

Clearly, we’re still a long way from Artificial General Intelligence, the nebulous concept describing the moment when machines are capable of doing most tasks as well as or better than human beings. Some experts, like Yann LeCun, Meta’s chief AI scientist, have been outspoken about the limitations of large language models, claiming that they will never reach human-level intelligence since they don’t really use logic. At an event in London last year, LeCun said that the current generation of AI models “just do not understand how the world works. They’re not capable of planning. They’re not capable of real reasoning.” He added: “We do not have completely autonomous, self-driving cars that can train themselves to drive in about 20 hours of practice, something a 17-year-old can do.”

Giansiracusa, however, strikes a more cautious tone. “We don’t really know how humans reason, right? We don’t know what intelligence actually is. I don’t know if my brain is just a big statistical calculator, kind of like a more efficient version of a large language model.”

Perhaps the key to living with generative AI without succumbing to either hype or anxiety is simply to understand its inherent limitations. “These tools are not actually designed for a lot of things that people are using them for,” said Chirag Shah, a professor of AI and machine learning at the University of Washington. He co-wrote a high-profile research paper in 2022 critiquing the use of large language models in search engines. Tech companies, Shah thinks, could do a much better job of being transparent about what AI can and can’t do before foisting it on us. That ship may have already sailed, however. Over the last few months, the world’s largest tech companies (Microsoft, Meta, Samsung, Apple and Google) have made declarations to tightly weave AI into their products, services and operating systems.

“The bots suck because they weren’t designed for this,” Shah said of my word game conundrum. Whether they suck at all the other problems tech companies are throwing them at remains to be seen.

How else have AI chatbots failed you? Email me at pranav.dixit@engadget.com and let me know!

Update, June 13 2024, 4:19 PM ET: This story has been updated to include a statement from Perplexity.

Ferdja Ferdja.com delivers the latest news and relevant information across various domains including politics, economics, technology, culture, and more. Stay informed with our detailed articles and in-depth analyses.
